What is Google’s nano banana and where to try it

At AI Flow Chat

The reveal

For months, a mystery model called nano banana quietly dominated LMArena’s image-edit arena. The community swapped theories, posted banana emojis, and tested it obsessively. On August 26, 2025, Google DeepMind confirmed that nano-banana is the codename for Gemini 2.5 Flash Image, in an announcement on the Google Developers Blog that also included pricing, examples, and a code sample.

The stakes are real. Creators, marketers, designers, and product teams have been asking for speed, control, and consistency, not just the ability to generate pretty pictures. Gemini 2.5 Flash Image aims squarely at those needs with identity consistency, targeted edits by prompt, multi-image fusion, and low latency that feels interactive. Leaderboards are snapshots, not verdicts, but as of August 26, 2025, LMArena’s image-edit board showed why people noticed this model first.

Summary

Nano banana is Google’s Gemini 2.5 Flash Image, which is now in preview. It stands out for keeping characters on-model across scenes, performing precise local and global edits from natural-language prompts, fusing multiple images coherently, applying templates reliably, and answering edits with low latency. You can try it today in the consumer-facing Gemini app and build with it via Google AI Studio, the Gemini API, and Vertex AI, with additional access through OpenRouter and fal.ai. Watermarking varies by surface: the Gemini app shows a visible watermark, while AI Flow Chat outputs have no visible watermark; in both cases an invisible SynthID mark remains.

If you just want an easy platform to try nano banana for free, head to AI Flow Chat.

When you use nano banana inside the Gemini app, Google overlays a visible watermark. On AI Flow Chat, there is no visible watermark on outputs. The invisible SynthID provenance mark still remains.

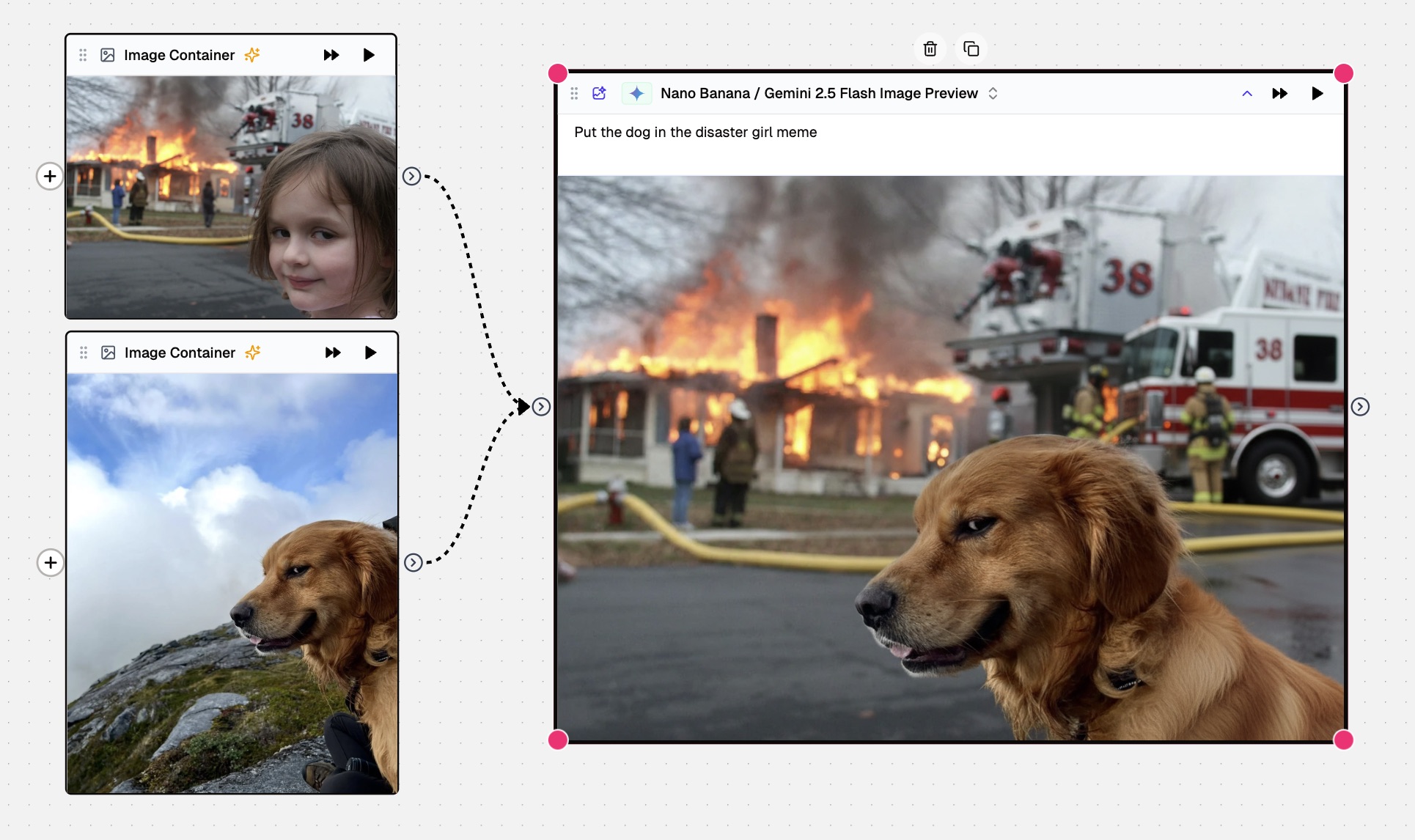

Photoshop a dog in place of the girl using nano banana on AI Flow Chat

What is nano banana?

Nano banana is the internal alias for Gemini 2.5 Flash Image, Google’s state-of-the-art image generation and editing system. It is designed to pair speed and cost-efficiency with better image quality and creative control. In Google’s announcement post, Introducing Gemini 2.5 Flash Image, aka nano-banana, the team frames the model as an answer to two major requests from developers following Gemini 2.0 Flash: higher-quality images and more powerful, controllable edits.

It is available right now for developers through the Gemini API and Google AI Studio and for enterprises in Vertex AI. It also appears in the consumer-facing Gemini app’s updated native image editing, which brings the model’s consistency and blending capabilities to everyday photo workflows.

Key differences and capabilities

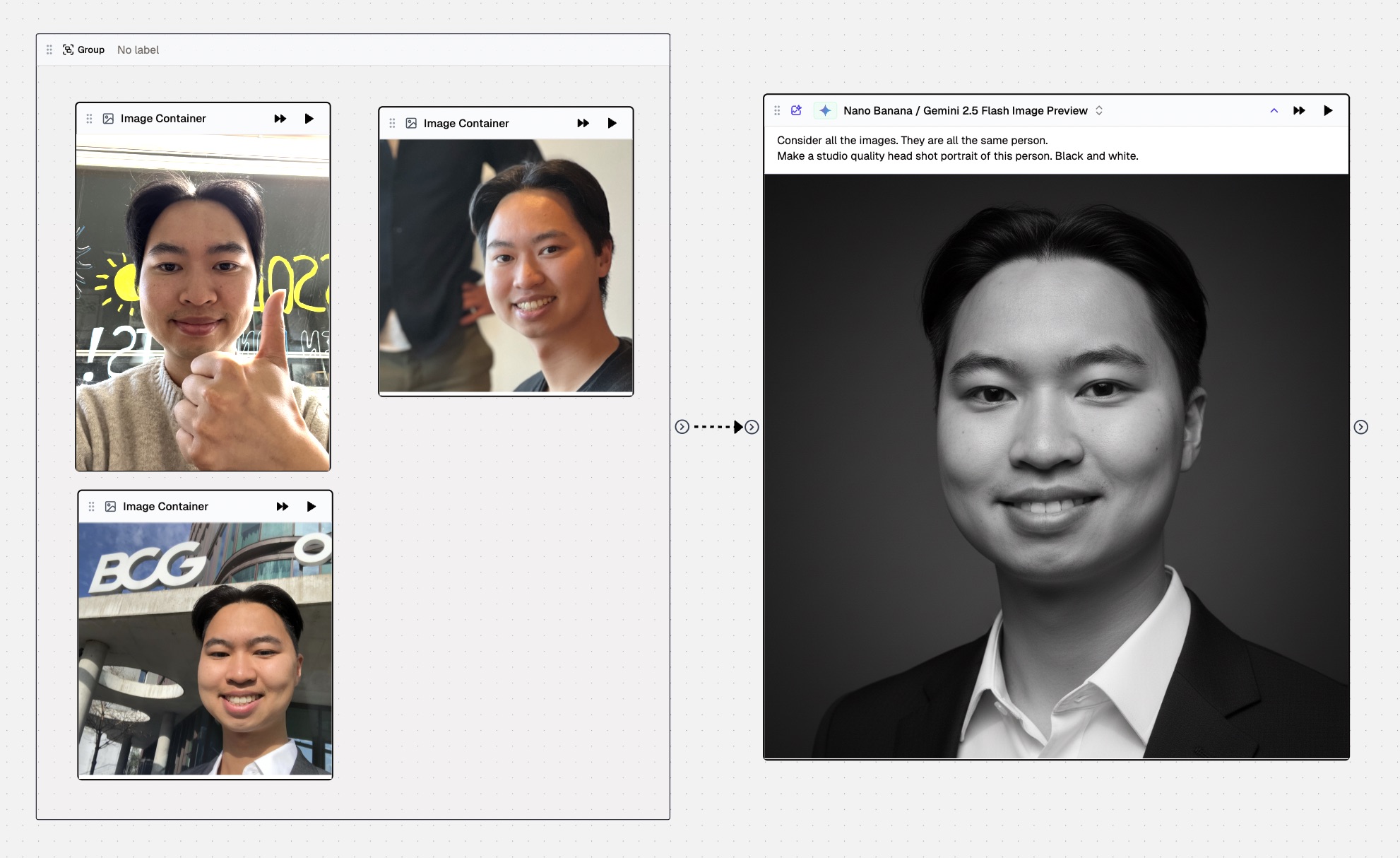

The headline difference is consistency. With prior image systems, a character might subtly change each time you switch backgrounds or camera angles. Gemini 2.5 Flash Image is trained to maintain identity, including details like skin tone, facial structure, pet markings, and product details, across edits and generations. In practice, that means storyboards where the hero stays recognizable or product visuals that look like the product you actually sell.

The second shift is targeted, prompt-based editing. Instead of painting masks and managing layers, you can describe the change you want. For example, you can use prompts to blur the background while keeping the subject sharp, or to remove a stain on a shirt and add soft window light from the left. The model handles local and global edits, replacing hand-drawn selections with natural language.

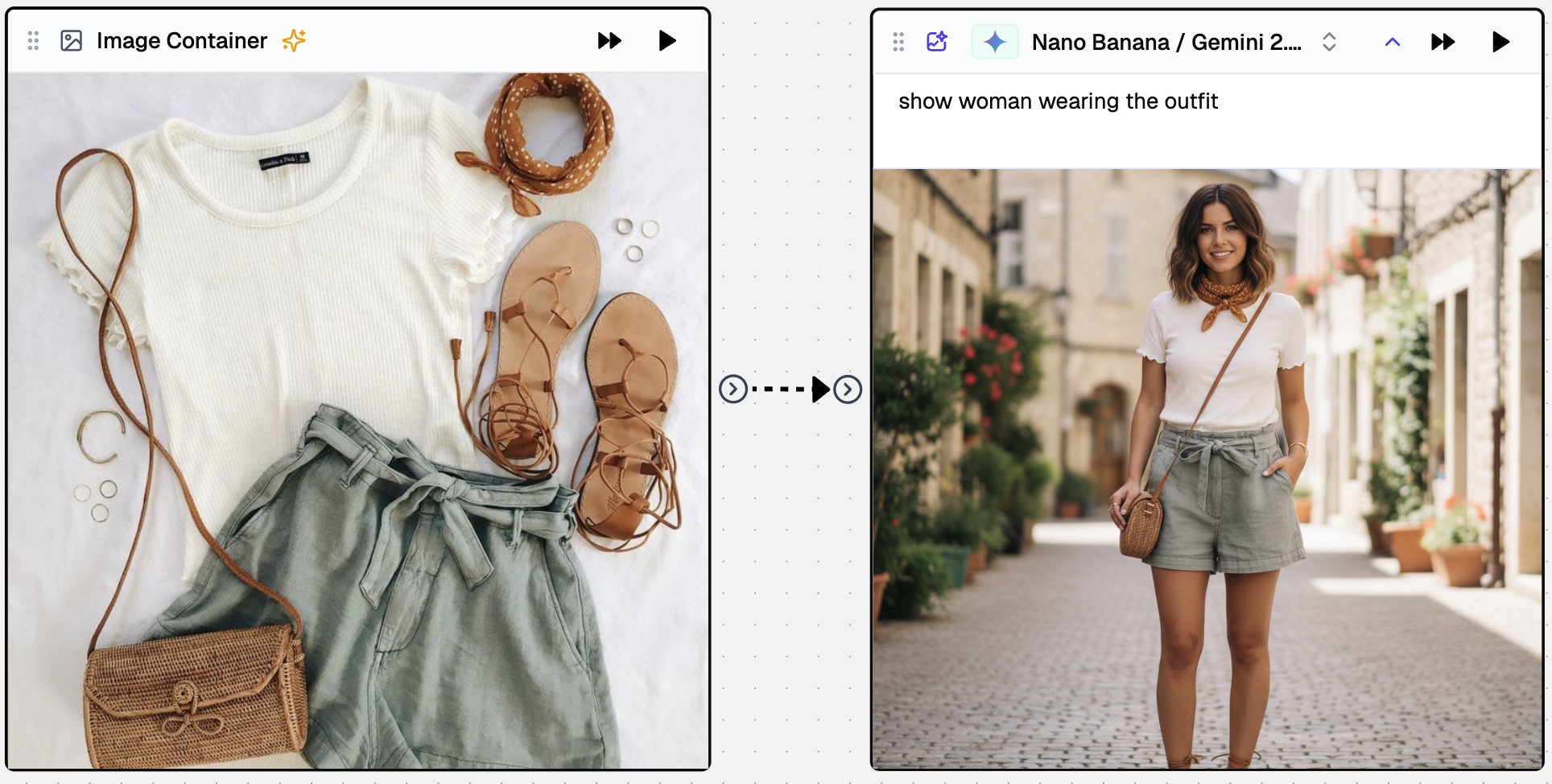

Third, multi-image fusion lets you merge inputs. You can drag a chair into a new living room and restyle the upholstery to match a reference fabric, or blend two portraits into a single scene with lighting that makes sense. Template adherence is another practical win: if you provide a layout, such as an employee badge or a product card, the model aims to keep outputs on-brand, with each element in its intended place.

Under the hood, the model benefits from Gemini’s world knowledge, which helps it interpret diagrams, follow semantically complex instructions, and keep details grounded. Because it’s part of the Flash family, responses arrive quickly enough to feel interactive, which makes iteration more conversational, incremental, and forgiving.

Make professional headshot portraits with nano banana on AI Flow Chat

Capabilities in action

Imagine you are building a brand story around a recurring character. You start with a headshot and ask the model to place them in a sunlit café, then a rainy street, then a vintage train car. The clothing can change, the lighting can evolve, and the angle can shift, but the character’s face remains recognizably the same. This removes a lot of friction from episodic content and serialized campaigns.

Now pivot to edits. You can upload a product photo and ask the model to remove the crease on the label, nudge the bottle to the right, and replace the background with a soft gradient from teal to white. You do not need to draw a mask or build a layer stack; you just describe the outcome. The model applies local retouching and global layout changes in one pass.

Switch to an educational moment. You can sketch a rough circuit diagram or a skeletal system on a whiteboard and ask the model to annotate it, highlight misconceptions, and render a cleaned-up version. Thanks to world knowledge, the system can read your scrawl, identify components, and produce an accurate, legible image. This is the idea behind AI Studio’s CoDrawing template, which turns a simple canvas into an interactive tutor.

Next, try multi-image fusion. You can snap a photo of an empty living room, then upload a sofa, rug, and lamp from your catalog. A prompt can direct the model to place the sofa along the back wall, scale the rug to fit under the coffee table, and match the lamp’s metal to brushed brass. The model composes a coherent scene with consistent lighting and shadows, which is useful for instant staging or catalog composites.

Finally, you can walk through multi-turn edits. Starting with an unfurnished room, you can ask it to paint the walls a muted sage. After reviewing, you can add a walnut bookshelf and a reading chair by the window. In a final step, you could replace the chair fabric with a boucle texture. Each turn preserves what you like and changes only what you requested. This creates a conversational editing loop that feels closer to directing a design assistant than pushing pixels.
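The multi-turn loop above maps naturally onto a chat session. The sketch below assumes the google-genai SDK's `client.chats.create(...)` and `chat.send_message(...)`, and that `send_message` accepts a list mixing text and a PIL image the same way `generate_content` does in the code sample later in this article. The turn wording, the `run_edit_session` and `demo` helpers, and the file names `empty_room.jpg` and `room_step_N.png` are hypothetical. The session logic accepts any object with a `send_message` method, so it can be exercised without network access.

```python
from __future__ import annotations

from typing import Any, Iterable

# One small change per turn, each stating what to preserve (hypothetical wording).
EDIT_TURNS = [
    "Paint the walls a muted sage. Keep everything else unchanged.",
    "Add a walnut bookshelf and a reading chair by the window. Keep the wall color.",
    "Replace the chair fabric with a boucle texture. Change nothing else.",
]


def run_edit_session(chat: Any, source_image: Any, turns: Iterable[str]) -> list[Any]:
    """Send each edit turn to a chat session and return the responses in order.

    `chat` is any object with a send_message(message) method, for example a
    google-genai chat created with client.chats.create(...).
    """
    responses = []
    for i, turn in enumerate(turns):
        # Only the first turn attaches the source photo; later turns rely on the
        # chat history, assumed to include the model's previous edited image.
        message = [turn, source_image] if i == 0 else turn
        responses.append(chat.send_message(message))
    return responses


def demo() -> None:
    """Run the three turns against the live API (not called automatically)."""
    # Requires: pip install google-genai pillow, and GEMINI_API_KEY in the environment.
    from google import genai
    from PIL import Image

    client = genai.Client()
    chat = client.chats.create(model="gemini-2.5-flash-image")
    room = Image.open("empty_room.jpg")  # hypothetical input file
    for step, resp in enumerate(run_edit_session(chat, room, EDIT_TURNS), start=1):
        for part in resp.candidates[0].content.parts:
            if part.inline_data:
                # Writes the returned bytes as-is; assumes the model returns PNG.
                with open(f"room_step_{step}.png", "wb") as f:
                    f.write(part.inline_data.data)
```

Keeping each turn to one change, with an explicit "keep" clause, mirrors the review-then-refine rhythm described above and makes it easy to see which turn introduced an unwanted change.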

Generate an AI model wearing your outfit on AI Flow Chat

Real-world use cases

E-commerce teams can stage products in diverse, on-brand environments without scheduling shoots for every variant. A single packshot becomes a family of lifestyle scenes, with consistent labels and materials preserved across angles. Marketers can build ad sets that stay true to a hero character or a mascot, so stories feel continuous across channels.

Interior designers and real estate marketers can mock up rooms from multiple reference images, fusing a client’s preferred textures and pieces into photorealistic previews. Comic and storyboard artists can rough out scenes with consistent characters and iterate panel-by-panel using prompts instead of manual masking. Educators can turn hand-drawn diagrams into clear illustrations and then style them for different grade levels.

Game studios can generate aligned NPC portraits that share a style and world logic, speeding up preproduction. Social teams can composite quick posts that keep people and pets recognizable from one meme to the next. Consistency is improved, not perfect, so iteration still matters, but the gaps are narrower and the feedback loop is faster.

Availability, Pricing, and Guidelines

For consumers, the fastest path is the Gemini app’s updated native image editing. Google’s Keyword post explains how you can keep your likeness while changing outfits or locations, blend multiple photos into one scene, and perform multi-turn edits, all inside the app. If you have wished photo editing were as simple as describing the change, this is your on-ramp.

For rapid prototyping, start in Google AI Studio. Build Mode now includes four remixable template apps that demonstrate core capabilities: Past Forward for character consistency, PixShop for prompt-based photo editing, CoDrawing for world-knowledge tutoring on a blank canvas, and Home Canvas for multi-image fusion and room restyling. Each template can be customized in minutes and shared or exported to code.

Developers can call the Gemini API for image generation and editing using the model ID gemini-2.5-flash-image. The docs show how to send text-plus-image inputs and receive images as output, with low-latency feedback that supports interactive UIs. Enterprises can access the model in Vertex AI with governance and integration tools familiar to GCP teams. If you prefer third-party hubs, Gemini 2.5 Flash Image is also available via OpenRouter and fal.ai. These are official partner listings. Community-run hubs like Pollo AI may expose the model, but they are not official channels, and availability or quotas can change without notice.

Google prices Gemini 2.5 Flash Image at $30 per 1 million output tokens. Each generated image is billed as 1,290 output tokens, which translates to approximately $0.039 per image. Other modalities on input and output follow Gemini 2.5 Flash pricing, so check the live pricing page before you move to production, especially if your workflow mixes text and image outputs.
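The pricing arithmetic is easy to check in code: one image is 1,290 × $30 / 1,000,000 = $0.0387, which rounds to the $0.039 quoted above. The sketch below covers image output only; input tokens and any text output are billed separately and are left out. The helper name is hypothetical, and the constants are placeholders to update from the live pricing page.

```python
USD_PER_MILLION_OUTPUT_TOKENS = 30.0  # image output rate quoted above; check live pricing
TOKENS_PER_IMAGE = 1_290  # each generated image is billed as 1,290 output tokens


def estimate_image_cost(num_images: int) -> float:
    """Estimated USD cost of the image output tokens for num_images images.

    Input tokens and text output are billed separately and are not included.
    """
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    return num_images * TOKENS_PER_IMAGE * USD_PER_MILLION_OUTPUT_TOKENS / 1_000_000


if __name__ == "__main__":
    for n in (1, 1_000, 100_000):
        print(f"{n:>7,} images: ${estimate_image_cost(n):,.4f}")
```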

No model is flawless. Fine typographic elements can still wobble, long strings of text may need retries, and tricky poses might stray from your intent. You will occasionally see over-smoothing or small artifacts. Google’s roadmap calls out improvements to long-form text rendering, consistency reliability, and fine details.

Watermarking differs by surface. In the Gemini app, images include a visible watermark, and all outputs carry an invisible SynthID digital watermark so they can be identified as AI-generated or edited. When you use the model via the API (including on AI Flow Chat), there is no visible watermark; the invisible SynthID mark remains. SynthID’s approach is documented by Google DeepMind and is designed to persist through common transformations.

Respect IP and privacy. Do not upload images you do not have rights to edit, and avoid depicting private individuals in sensitive contexts without consent. If you are disclosing AI assistance, note that images include an invisible SynthID mark by default, and a visible watermark when using the Gemini app. Platform policies apply across the Gemini app, AI Studio, and the API, so review them before launching a public tool.

Getting started and prompting tips

On AI Flow Chat, create a new app, add an Image Generator node, and select the model “Nano Banana / Gemini 2.5 Flash Image Preview” in the node settings. Upload your image(s) or paste references, then use natural-language prompts for local or global edits. Connect the output to a preview or save node to capture results. See the AI Flow Chat docs at /docs for node-based flows and image nodes.

In the Gemini app, upload a photo. Ask for a location change while keeping the subject the same, then refine the image with follow-up prompts such as adding softer morning light, applying a warm film grain, or keeping the original eye color. The back-and-forth demonstrates the model’s low latency, allowing you to steer the edit conversationally.

In Google AI Studio, launch a template like Past Forward and swap in your own character references. Tweak prompts for art direction, then switch to PixShop to test targeted retouching on your product photos. Move to CoDrawing to see how the model reads your diagrams, then finish in Home Canvas by dragging furniture into a room and fusing the pieces into a photoreal result.

When you are ready to code, the Gemini API takes text plus image inputs and returns images in the response. You can pass inline image data or file references, then save the resulting image to your app. The model name to use is gemini-2.5-flash-image.

Code sample (Python):

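```python
# Requires: pip install google-genai pillow
# genai.Client() reads the API key from the GEMINI_API_KEY environment variable.
from io import BytesIO

from google import genai
from PIL import Image

client = genai.Client()

prompt = "Blend these: move the red armchair into the living room and match lighting; keep the rug unchanged."
room = Image.open("living_room.jpg")
chair = Image.open("red_chair.png")

# Text and images go in one contents list; the SDK accepts PIL images directly.
resp = client.models.generate_content(
    model="gemini-2.5-flash-image",
    contents=[prompt, room, chair],
)

# The response can mix text parts and image parts; print text, save images.
for part in resp.candidates[0].content.parts:
    if part.text:
        print(part.text)
    elif part.inline_data:
        out = Image.open(BytesIO(part.inline_data.data))
        out.save("fusion_result.png")
```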
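The sample above reopens each image with PIL and writes every result to the same `fusion_result.png`, so a response with several image parts keeps only the last one. A variant that writes each image part's raw bytes to its own file might look like the sketch below. The helper name, the MIME-to-extension table, and the `.bin` fallback are assumptions; it relies only on the `part.inline_data.data` and `part.inline_data.mime_type` fields of the SDK's response parts.

```python
from __future__ import annotations

from pathlib import Path
from typing import Any, Iterable

# Hypothetical MIME-type-to-extension table; extend it as needed.
_EXTENSIONS = {"image/png": ".png", "image/jpeg": ".jpg", "image/webp": ".webp"}


def save_image_parts(parts: Iterable[Any], out_dir: str | Path, stem: str = "result") -> list[Path]:
    """Write every inline image part to its own file and return the paths.

    Text parts (inline_data is None) are skipped. The bytes are written as
    returned, so there is no re-encoding through PIL.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []
    for part in parts:
        blob = getattr(part, "inline_data", None)
        if blob is None or not blob.data:
            continue
        ext = _EXTENSIONS.get(blob.mime_type or "", ".bin")
        path = out_dir / f"{stem}_{len(written)}{ext}"
        path.write_bytes(blob.data)
        written.append(path)
    return written
```

With the response from the sample, a call would be `save_image_parts(resp.candidates[0].content.parts, "outputs", stem="fusion")`, producing `outputs/fusion_0.png` and so on.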

If you need governance, quotas, and enterprise controls, deploy in Vertex AI. You get the same core capabilities with IAM, monitoring, and integration into your existing GCP stack.
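Switching between the Gemini API and Vertex AI is mostly a matter of how the client is constructed. In the google-genai SDK, `genai.Client()` picks up an API key from the `GEMINI_API_KEY` environment variable, while `genai.Client(vertexai=True, project=..., location=...)` targets Vertex AI using your GCP credentials. The helper below is a hypothetical sketch: the default region `us-central1`, the `GOOGLE_CLOUD_PROJECT` fallback, and the project name in the usage comment are assumptions, and regional availability of the image model should be checked on the Vertex AI model page.

```python
from __future__ import annotations

import os


def client_kwargs(
    use_vertex: bool, project: str | None = None, location: str = "us-central1"
) -> dict[str, object]:
    """Return keyword arguments for google.genai.Client (hypothetical helper).

    Gemini API mode returns no arguments: genai.Client() then reads the
    GEMINI_API_KEY environment variable. Vertex AI mode needs a GCP project
    and region; the default region here is an assumption.
    """
    if not use_vertex:
        return {}
    project = project or os.environ.get("GOOGLE_CLOUD_PROJECT")
    if not project:
        raise ValueError("Vertex AI mode needs a project or GOOGLE_CLOUD_PROJECT")
    return {"vertexai": True, "project": project, "location": location}


# Usage (needs the google-genai package and credentials):
#   from google import genai
#   client = genai.Client(**client_kwargs(use_vertex=True, project="my-gcp-project"))
#   resp = client.models.generate_content(model="gemini-2.5-flash-image", contents=[...])
```

Keeping the switch in one place lets the same request code run against AI Studio keys during prototyping and against Vertex AI, with IAM and monitoring, in production.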

Be explicit about what should not change. Start prompts by stating what to keep, such as the subject’s face and clothing or the label text and bottle shape. Then, describe the target edit and where it applies in one instruction, for example, to replace the background with a twilight cityscape while keeping the subject’s lighting consistent.
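The keep-first pattern can be captured in a small helper so every request states what to preserve before what to change. This is a hypothetical sketch: the function names and the exact sentence wording are assumptions, not a documented prompt format, and the model simply reads the result as natural language.

```python
from __future__ import annotations


def _join(items: list[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b and c'."""
    if len(items) == 1:
        return items[0]
    return ", ".join(items[:-1]) + " and " + items[-1]


def build_edit_prompt(keep: list[str], edit: str, where: str | None = None) -> str:
    """Compose one instruction: what to keep first, then the edit and its scope.

    Hypothetical helper; the wording is an assumption, not a Gemini API format.
    """
    edit = edit.strip().rstrip(".")
    if not edit:
        raise ValueError("edit must describe the change you want")
    sentences = []
    if keep:
        sentences.append(f"Keep {_join(keep)} unchanged.")
    if where:
        edit += f", applied only to {where}"
    # Capitalize the first letter without lowercasing the rest.
    sentences.append(edit[0].upper() + edit[1:] + ".")
    return " ".join(sentences)
```

For example, `build_edit_prompt(["the subject's face", "the subject's clothing"], "replace the background with a twilight cityscape and keep the subject's lighting consistent")` returns "Keep the subject's face and the subject's clothing unchanged. Replace the background with a twilight cityscape and keep the subject's lighting consistent."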

Reference images help with style and texture transfer. Attach the pattern, fabric, or color palette you want and instruct the model to apply the fabric to the sofa while keeping the scale realistic. Treat the conversation as a sequence of small, reversible steps, as multi-turn edits are the fastest way to converge on a precise look. For brand pipelines, pair a reusable template prompt with a seed set of reference images so on-brand outputs are repeatable.
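For the brand-pipeline tip, one minimal sketch is a `string.Template` prompt plus a fixed, sorted list of reference image paths, so every run sends the same instruction and the same references in the same order. The template text, placeholder names, and helper are hypothetical. Fixing the inputs this way makes requests repeatable, but it does not make the model's output deterministic.

```python
from __future__ import annotations

from pathlib import Path
from string import Template

# Hypothetical reusable prompt; $placeholders are filled per request.
BRAND_TEMPLATE = Template(
    "Using the attached reference images for style and texture, apply $material "
    "to the $target while keeping the scale realistic. "
    "Keep the brand palette ($palette) and the product shape unchanged."
)


def build_brand_request(
    target: str, material: str, palette: str, reference_paths: list[str]
) -> tuple[str, list[Path]]:
    """Fill the template and return (prompt, sorted reference paths).

    Template.substitute raises KeyError if the template gains a placeholder
    that is not supplied here, which catches incomplete requests early.
    """
    if not reference_paths:
        raise ValueError("provide at least one reference image")
    prompt = BRAND_TEMPLATE.substitute(target=target, material=material, palette=palette)
    # Sorting gives a stable order, so identical seed sets yield identical requests.
    refs = sorted(Path(p) for p in reference_paths)
    return prompt, refs
```

A caller would then open each path, for example `contents = [prompt, *(Image.open(p) for p in refs)]` with PIL, and pass that list to `client.models.generate_content`.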

How it compares to traditional editing tools

The post-Photoshop claim is easy to overstate. Natural-language, multi-turn editing changes the workflow, allowing you to get 80% of many edits done with one instruction and polish the result interactively. However, pixel-perfect retouching, complex typography, intricate layer compositing, and print-ready color management are still best handled by traditional tools.

The right approach is complementary. Use Gemini 2.5 Flash Image to ideate, maintain identity across scenes, and handle targeted changes without masks. When you need exact kerning, CMYK proofing, or micro-level cleanup, you can hand off the image to Photoshop, Illustrator, or your preferred editor. This process allows you to ship faster while keeping control where it matters.

Roadmap and community

The model is in preview, and Google notes that stability and quality will improve in the coming weeks. The team is actively working on long-form text rendering, even more reliable character consistency, and fine detail fidelity. If you run into edge cases, share feedback in the developer forum or on X via the Google AI Studio account. And remember the LMArena context: as of August 26, 2025, nano banana topped the image-edit board, but leaderboards shift as models iterate.

FAQ

Where can I use nano banana? Start with AI Flow Chat. It's the easiest way to try nano banana for free in a no-setup web app. You can also use it in the consumer Gemini app, build with it in Google AI Studio, integrate via the Gemini API, deploy on Vertex AI, or access it through partners like OpenRouter and fal.ai.

Is nano banana officially from Google? Yes. The Google Developers Blog announced Gemini 2.5 Flash Image, also known as nano-banana, which confirmed the alias.

Is it free? You can try it for free in Google AI Studio’s Build Mode and in the Gemini app, depending on your region and account, but API and production usage are billed. The model’s image output pricing is $30 per 1M output tokens; each image counts as 1,290 tokens (~$0.039).

Can I use it commercially? Yes, through the Gemini API and Vertex AI, subject to Google’s terms and your use case. Check licensing and policy details before deployment.

Does identity consistency always hold? It is much improved but not perfect. Complex poses, long edit chains, or ambiguous prompts may need iteration.

Can AI edits be detected? Images include an invisible SynthID watermark by default, and a visible watermark when using the Gemini app.

Resources and links

- Introducing Gemini 2.5 Flash Image (aka nano-banana) on the Google Developers Blog

- Image editing in the Gemini app (Google Keyword post)

- LMArena image-edit leaderboard

- Mashable coverage confirming the alias and describing real-world editing behavior

- Google AI Studio Build Mode and template apps

- Gemini API docs (image generation and editing)

- Vertex AI Studio model page (enterprise access)

- Partner access on OpenRouter

- Partner access on fal.ai

- SynthID (invisible watermarking)
